Abstract

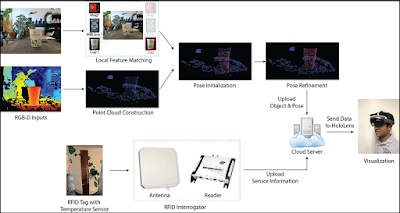

We present the concept of X-Vision, an enhanced Augmented Reality (AR)-based visualization tool with real-time sensing capability in a tagged environment. We envision that this type of tool will enhance user-environment interaction and improve productivity in factories, smart spaces, home and office environments, maintenance/facility rooms, operating theatres, etc. In this paper, we describe the design of this visualization system, which combines the object's pose information estimated by a depth camera with the object's ID and physical attributes captured by RFID tags. We built a physical prototype of the system that projects 3D holograms of objects encoded with sensed information, such as the water level and temperature of common office/household objects. The paper also discusses the quality metrics used to compare pose estimation algorithms for robust reconstruction of the object's 3D data.

INTRODUCTION:

Superimposing information onto the real world, the concept commonly known as Augmented Reality (AR), has evolved rapidly over the past few years thanks to advances in computer vision, connectivity and mobile computing. In recent years, multiple AR-based applications have touched the everyday lives of many of us. A few examples are Google Translate's augmented display [12] for improving productivity, an AR GPS navigation app for travel [22], and the CityViewAR tool for tourism [17]. All these applications require a link between the physical and digital worlds. Often this link is either the ID of an object or information about the physical space, for instance, an image in the Google Translate app or the GPS location in an AR navigation tool.

This link can be easily established in informationally structured environments using visual markers, 2D barcodes and RFID tags. Among the three, RFID tags have a unique advantage: they can communicate wirelessly over a couple of meters without requiring line-of-sight access. In addition, RFID tags can be easily attached to inventory and consumer products in large numbers at an extremely low per-unit cost. Passive RFID, in particular, has many applications in object tracking [14], automatic inventory management [15], pervasive sensing [5], etc. In a tagged environment with RFID infrastructure installed, information about a tagged object's ID and physical attributes can be wirelessly retrieved and mapped to a digital avatar.

Fig. 1. Left: A user wearing the system sees a cup with overlaid temperature information. Right: System components: an Intel RealSense D415 RGB-D camera is attached to a HoloLens via a custom mount.

* Equal contribution

AR-Based Smart Environment

AR brings digital components into a person's perception of the real world. Today, advanced AR technologies facilitate interactive, bidirectional communication and control between a user and the objects in the environment. AR-related research follows two main branches. In one branch, researchers design algorithms for accurate object recognition and 3D pose estimation to achieve a comprehensive understanding of the environment; research in this direction provides theoretical support for industry products. In the other branch, researchers apply existing computer vision techniques to enhance the user-environment interaction experience for different purposes; work on this track benefits areas such as education, tourism and navigation by improving the user experience. Our work follows this second trend, fusing object recognition and 3D pose estimation techniques with RFID sensing capabilities to create a smart environment.

Emerging RFID Applications

RFID is widely used as an identification technology to support tracking in supply chains and has been successfully deployed in various industries. Recently, the industry's focus seems to be shifting toward generating higher value from existing RFID setups. This involves tagging more and more items and developing new applications using tags that allow for sensing, actuation and control [8], and even gaming. Another exciting application with industrial benefit is fusion with emerging computer vision and AR technologies. The fusion of RFID and AR is an emerging field, and recent studies have combined these technologies for gaming and education. Yet we see a lot of room for further exploration, especially in going beyond the ID in RFID. One of the earlier papers studied the use of RFID to interact with physical objects in a smartphone-based game, which enhanced the gaming experience. Another study combined smart bookshelves equipped with RFID tags with mixed-reality interfaces for information display in libraries. Another study explored the use of AR with tags to teach geometry to students. These studies show strong interest in the community in exploring mixed-reality applications that use tags for object IDs. In this paper, we use RFID not just for identification but also to wirelessly sense the environment and the objects' attributes. This creates a more intimate and comprehensive interaction between humans and surrounding objects.

Object Identification and Pose Estimation

Our system uses an Intel RealSense D415 depth camera to capture color and depth information. It is attached to a HoloLens via a custom mount provided by [9] and faces the same direction as the HoloLens (Figure 1). The captured images are used to identify the in-view target object and estimate its pose.

Object Identification:

Object recognition is a well-studied problem, and we adopt a local-feature-based method in our system because it is suitable for small-scale databases. To identify an in-view object from a given database, a local-feature-based method generally proceeds in two steps. First, it extracts representative local visual features from both the scene image and the template object images. Then it matches the scene features with those of each template object. The target object in view is identified as the template object with the highest number of matched local features. If no template object has a sufficiently large number of matched features (that is, all counts fall below a predetermined threshold), the captured view is deemed not to contain a target. Our system follows this scheme and uses the SURF algorithm [3] to compute local features. Compared with other local feature algorithms such as SIFT, SURF is fast and handles blurred and rotated images well.
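The identification rule above (pick the template with the most matches, reject the view if even the best count is below a threshold) can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the authors' code.

- Descriptors are assumed to be already extracted as `(N, D)` arrays (SURF descriptors are 64-dimensional). Extraction itself is omitted.
- Brute-force matching with Lowe's ratio test is an assumed matching criterion.
- The values `ratio=0.75` and `min_matches=10` are hypothetical defaults. The paper does not state its matcher or threshold.

```python
import numpy as np


def count_matches(scene_desc, template_desc, ratio=0.75):
    """Count scene descriptors whose nearest template descriptor passes Lowe's ratio test."""
    if len(scene_desc) == 0 or len(template_desc) < 2:
        return 0
    # Brute-force Euclidean distances, shape (n_scene, n_template)
    dists = np.linalg.norm(scene_desc[:, None, :] - template_desc[None, :, :], axis=2)
    nearest_two = np.sort(dists, axis=1)[:, :2]
    return int(np.count_nonzero(nearest_two[:, 0] < ratio * nearest_two[:, 1]))


def identify(scene_desc, templates, min_matches=10, ratio=0.75):
    """Return (best_name or None, per-template match counts).

    templates maps an object name to its (M, D) descriptor array. The view is
    rejected (None) when even the best template has fewer than min_matches matches.
    """
    counts = {name: count_matches(scene_desc, desc, ratio) for name, desc in templates.items()}
    if not counts:
        return None, counts
    best = max(counts, key=counts.get)
    return (best if counts[best] >= min_matches else None), counts
```

Returning the per-template counts alongside the decision makes it easy to inspect how close a rejected view came to the threshold.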

Pose Estimation:

After identifying the in-view object, our system estimates its position and rotation in space, namely its 3D pose, so that augmented information can be rendered properly. We achieve this by constructing a point cloud of the scene and aligning the identified object's template point cloud with it. Many algorithms exist for point cloud alignment. We adopt the widely used Iterative Closest Point (ICP) algorithm [4] because it usually finds a good alignment quickly. To obtain better pose estimates, especially for non-symmetric objects (e.g., a mug), a template object usually contains point clouds captured from multiple viewpoints. However, the performance of ICP depends on the quality of its initialization. Our system finds a good initial pose by moving the template object's point cloud to the 3D position back-projected from the centroid of the matched local feature coordinates in the scene image. The coordinates of correctly matched local features are 2D projections of points on the target object's surface. Back-projecting their centroid should therefore yield a 3D point close to the target object's surface.
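The initialization and alignment steps above can be sketched as follows. This is a minimal NumPy illustration, not the authors' pipeline. It has two parts:

1. **Initialization.** The centroid of the matched feature pixels is back-projected through a pinhole camera model to obtain an initial translation.
2. **Alignment.** Point-to-point ICP refines the pose. Correspondences use brute-force nearest neighbours, and each step solves for the rigid transform with the SVD-based Kabsch method.

The following are all assumptions:

- The intrinsics tuple `(fx, fy, cx, cy)` and depth in metres.
- Reading depth at the single centroid pixel. A real system might use a more robust depth estimate.
- The parameters `iters` and `tol`.

```python
import numpy as np


def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at the given depth to a camera-frame 3D point."""
    return np.array([(u - cx) * depth / fx, (v - cy) * depth / fy, depth])


def initial_translation(matched_uv, depth_image, intrinsics, template_points):
    """Offset that moves the template centroid onto the back-projected feature centroid.

    matched_uv: (N, 2) pixel coordinates of matched scene features.
    intrinsics: (fx, fy, cx, cy). Depth is read at the centroid pixel (an assumption).
    """
    u, v = matched_uv.mean(axis=0)
    z = float(depth_image[int(round(v)), int(round(u))])
    return backproject(u, v, z, *intrinsics) - template_points.mean(axis=0)


def best_rigid_transform(src, dst):
    """Least-squares R, t with R @ src_i + t ~= dst_i (Kabsch / SVD method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Reflection guard: force det(R) = +1
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, dst_c - R @ src_c


def icp(template, scene, init_t, iters=30, tol=1e-6):
    """Point-to-point ICP with brute-force nearest neighbours.

    Returns R, t such that template @ R.T + t approximates the matching part of scene.
    """
    R, t = np.eye(3), np.asarray(init_t, dtype=float).copy()
    prev_err = np.inf
    for _ in range(iters):
        moved = template @ R.T + t
        dists = np.linalg.norm(moved[:, None, :] - scene[None, :, :], axis=2)
        nearest = scene[dists.argmin(axis=1)]
        err = dists.min(axis=1).mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
        dR, dt = best_rigid_transform(moved, nearest)
        # Compose the increment with the running estimate
        R, t = dR @ R, dR @ t + dt
    return R, t
```

Usage: `t0 = initial_translation(uv, depth, (fx, fy, cx, cy), template)` followed by `R, t = icp(template, scene_cloud, t0)`. The brute-force neighbour search is quadratic in the number of points. A k-d tree would be the usual replacement for realistic cloud sizes.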

RFID Sensing

An office space already equipped with RFID infrastructure serves as the tagged environment for the experiments in this study. The space is set up with an Impinj Speedway Revolution RFID reader connected to multiple circularly polarized Laird antennas with a gain of 8.5 dB. The reader system broadcasts at the FCC maximum of 36 dBm EIRP. For the tag sensors, we use two kinds of tags:

- Smartrac's paper RFID tags with the Monza 5 IC, as backscattered-signal-based water level sensors.
- Custom-designed tags with the EM4325 IC, as temperature sensors.

We use the Low Level Reader Protocol (LLRP), implemented with Sllurp (a Python library), to interface with the RFID readers and collect tag data.
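The link-budget numbers above can be checked with simple arithmetic. EIRP is the conducted transmit power minus cable loss plus antenna gain. Reaching the 36 dBm EIRP limit through an 8.5 dB antenna therefore needs about 27.5 dBm of conducted power. This is a minimal sketch under several assumptions:

- The 8.5 dB gain is treated as isotropic-referenced.
- Cable loss is zero by default.
- The function names are hypothetical helpers, not part of any reader API.

```python
def dbm_to_watts(p_dbm):
    """Convert a power level in dBm to watts."""
    return 10 ** (p_dbm / 10) / 1000


def max_conducted_dbm(eirp_dbm, antenna_gain_db, cable_loss_db=0.0):
    """Conducted reader power that yields eirp_dbm.

    Solves EIRP = P_conducted - cable_loss + antenna_gain for P_conducted.
    Assumes the gain is isotropic-referenced and cable loss defaults to zero.
    """
    return eirp_dbm - antenna_gain_db + cable_loss_db


print(round(dbm_to_watts(36), 2))   # prints 3.98 (watts EIRP)
print(max_conducted_dbm(36, 8.5))   # prints 27.5 (dBm at the reader port)
```

Any real cable loss raises the conducted power needed to reach the same EIRP by the same number of dB.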

Purely passive or semi-passive tags can be designed to sense multiple physical attributes and environmental conditions. One approach relies on the tag antenna's response to environmental changes caused by a sensing event. The event shifts the antenna's impedance, and the resulting change in the tag's signal power or response frequency can be attributed to events such as a temperature rise [24], gas concentration [19], or soil moisture [13]. Another approach uses the IC's on-board sensors or external sensors interfaced through GPIOs [7]. In this study, we use both approaches. The antenna impedance-shift approach detects the water level, and the IC's on-board temperature sensor measures the real-time temperature in a coffee cup.
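The impedance-shift approach can be illustrated with a simple detector. When the medium near the tag changes, the detuned antenna backscatters less power, so the received signal strength (RSSI) drops relative to an unperturbed baseline. The paper does not state its decision rule. This is a hypothetical sketch with the following assumptions:

- The averaged RSSI is compared against a dry-baseline calibration.
- The 6 dB drop threshold is an illustrative value.
- The function `detect_shift` is a hypothetical name.

```python
from statistics import mean


def detect_shift(rssi_reads_dbm, dry_baseline_dbm, drop_threshold_db=6.0):
    """Hypothetical detector: True if the mean RSSI has fallen more than
    drop_threshold_db below the dry (unperturbed) baseline, i.e. the antenna
    appears detuned by the surrounding medium."""
    if not rssi_reads_dbm:
        raise ValueError("need at least one RSSI read")
    return dry_baseline_dbm - mean(rssi_reads_dbm) > drop_threshold_db
```

Averaging several reads before comparing reduces the chance that a single noisy read flips the decision.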

Working Range Testing

The object recognition accuracy of our system depends on the camera-object separation. The minimum distance recommended by the depth camera manufacturer is 30 cm. As the separation increases, the quality of the depth data deteriorates, and beyond 1 m, the texture details of target objects are hard to capture. Similarly, RFID tag-reader communication depends on the tag-reader separation. If the separation is too large, the power reaching the tag is too low to power the IC and backscatter the signal to the reader. We define a score called normalized RSSI to allow comparison across experiments with different materials, ranges and signal strengths. A score of 1 denotes a good backscattered signal strength of -20 dBm at the reader. A score of 0 means the signal strength is below the reader's sensitivity (-80 dBm).
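The paper gives only the two endpoints of the normalized RSSI score. A natural reading is a linear map in dB between them, clipped to [0, 1]. This is a sketch under that assumption; the interpolation rule and the function name are ours, not the paper's. A missing read (`None`) is scored as 0, consistent with the signal being below reader sensitivity.

```python
def normalized_rssi(rssi_dbm, floor_dbm=-80.0, good_dbm=-20.0):
    """Map RSSI (dBm) to [0, 1]: 0 at/below the reader sensitivity floor,
    1 at/above the 'good' level, linear in dB in between (assumed)."""
    if rssi_dbm is None:  # tag not read at all
        return 0.0
    score = (rssi_dbm - floor_dbm) / (good_dbm - floor_dbm)
    return min(1.0, max(0.0, score))


print(normalized_rssi(-50))  # prints 0.5
```

Under this assumption, a score of 0.5 corresponds to -50 dBm at the reader.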

Recognition accuracy and normalized RSSI scores were obtained for different objects in this study by varying the camera-object and reader-object separation distances (see Fig. 8). Based on our observations, we set an acceptable score of 0.5-1 for both metrics to achieve reliable sensing and good-quality visualization. We therefore propose a working range of 40-75 cm between the camera and the target object, and of less than 100-150 cm between the tagged objects and the readers. One of our ultimate goals is to package the camera and the reader onto the head mount, eliminating the need for a separate RFID infrastructure.
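Selecting a working range from a distance sweep amounts to keeping the distances whose score meets the acceptance threshold. This is a minimal sketch under two assumptions: the working range is the longest run of consecutive sampled distances scoring at least 0.5, and `working_range` is a hypothetical helper. The same function applies to recognition accuracy versus camera-object distance and to normalized RSSI versus reader-object distance.

```python
def working_range(samples, threshold=0.5):
    """samples: iterable of (distance_cm, score) pairs.

    Returns (d_min, d_max) spanning the longest run of consecutive sampled
    distances whose score is at least threshold, or None if no sample qualifies.
    """
    best, run = None, []
    for distance, score in sorted(samples):
        if score >= threshold:
            run.append(distance)
            if best is None or len(run) > len(best):
                best = list(run)
        else:
            run = []  # a failing sample breaks the contiguous run
    return (best[0], best[-1]) if best else None
```

Requiring a contiguous run means a lucky single sample at a far distance does not extend the range past a gap of failing samples.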

CONCLUSION:

We present X-Vision, an enhanced augmented-vision system that superimposes 3D holograms on physical objects. The holograms are encoded with sensing information captured from tag sensors attached to everyday objects. Two test cases, water level and temperature sensing, are demonstrated in this paper. Further experiments were also performed to evaluate the pose estimation pipeline and the working range of the system.

Paper published by Yongbin Sun, Sai Nithin R. Kantareddy, Rahul Bhattacharyya, and Sanjay E. Sarma, Auto-ID Labs, Department of Mechanical Engineering, Massachusetts Institute of Technology, Cambridge, USA

BIBLIOGRAPHY:

[1] Ankur Agrawal, Glen J Anderson, Meng Shi, and Rebecca Chierichetti. Tangible play surface using passive RFID sensor array. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, page D101. ACM, 2018.

[2] Andrés Ayala, Graciela Guerrero, Juan Mateu, Laura Casades, and Xavier Alamán. Virtual touch FlyStick and PrimBox: two case studies of mixed reality for teaching geometry. In International Conference on Ubiquitous Computing and Ambient Intelligence, pages 309–320. Springer, 2015.

[3] Herbert Bay, Tinne Tuytelaars, and Luc Van Gool. SURF: Speeded up robust features. In European Conference on Computer Vision, pages 404–417. Springer, 2006.

[4] Paul J Besl and Neil D McKay. Method for registration of 3-D shapes. In Sensor Fusion IV: Control Paradigms and Data Structures, volume 1611, pages 586–607. International Society for Optics and Photonics, 1992.
